1) Learning Objectives

After reading this chapter, the reader should understand:

- the regulatory basis for practices in EDC study implementation and start-up
- similarities and differences between paper and web-based data collection
- basic and common features of fields, forms, and form groupings in EDC systems
- common dynamic workflow and data flow options within web-based EDC systems
- special considerations for data processing when using web-based EDC
- common steps in system set-up and testing
- methods for managing system access and privileges
- common practices for training clinical investigational sites in the use of EDC
- considerations and business models for using vendor-hosted EDC systems

2) Introduction

In the first clinical studies, data were collected on paper forms called Case Report Forms or CRFs. The structured forms served to assure complete and consistent data collection for each study participant. Since the early 1990s, we have documented best practices for designing paper CRFs.1,2,3,4,5 Design considerations focused on graphical layout within the confines of a paper page CRF and visual cues to aid the form filler such as use of boxes versus circles to indicate ‘check all that apply’ versus ‘check one’. Instructions were printed on the forms. There were rules governing the type of writing instruments such as black ballpoint pens. There were rules for correcting data such as use of a single line cross out and providing the corrected value, the date, and the initials of the person making the change. Yet there was nothing to facilitate the workflow of data collection or to prevent writing discrepant or errant values on the paper form.

In the days of paper-based data collection, how the data were entered into electronic format, typically in a Clinical Database Management System (CDMS), was not the most important consideration. Clinical study data were usually double data entered. Entry operators were trained in handling exceptions and allowable corrections. The CDMS partially automated the workflow of data entry, integration of external data, cleaning and coding, and provided automation for tracking data entry, discrepancy identification, and discrepancy resolution. However, the benefits of these advances were largely limited to in-house data management groups in Contract Research Organizations (CRO) or Sponsor organizations.

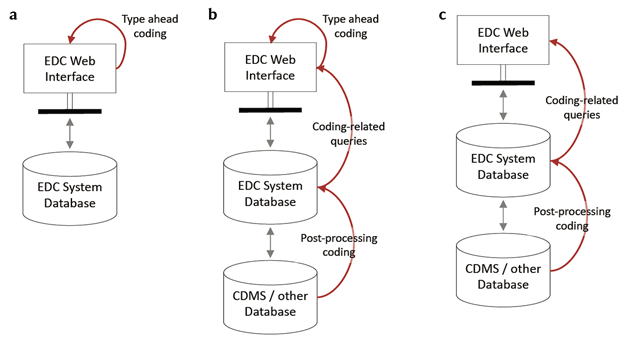

At the turn of the century, technology leveraging the internet such as web-based EDC systems opened the possibility of extending the benefits of information systems in clinical research to multiple roles on research teams, to investigational sites, and even to study participants. Commercial and academic advances were sporadic.6 EDC systems shifted the work of data entry to clinical investigational sites and contained less data management functionality than the CDMSs of the time. For example, integrating external data and medical coding were challenging or altogether not supported in early EDC systems.

There are four ways through which information systems can add value to organizations: automation, connectivity, decision support, and data mining.7 Examples include (1) automating workflow such as submitting a “low drug supply” alert to the research pharmacy when drug supply is low, (2) providing decision support such as flagging potential toxicities or making real-time status and exception data available to support trial management, (3) providing access to data from other systems through automating pre-population of data from other information systems such as an interface with an eConsent system that registers the participant in the EDC system as enrolled when the eConsent is completed or exchange of participant enrollment data with central or site-based Clinical Trial Management Systems (CTMSs), and (4) data mining through use of study data to identify factors predictive of missed visits or protocol violations. Today’s EDC functionality offers some of this potential. However, achieving broad benefit across clinical study design, conduct, and reporting for clinical investigators, research teams, and study participants is dependent on available EDC functionality and how it is leveraged for a clinical study and how the data are used for better decision-making within and across studies.6,8

At the same time, the decision to leverage advanced functionality rests on the balance between added value and cost. The most recent industry survey reports an average of 68.3 days to build and release a study database, 8.1 days between patient visits and entry of data from the visits into the EDC system, and 36.3 days between last patient last visit and database lock.9 These cycle times are longer and more variable than those observed ten years ago.9,10 This chapter focuses on realizing value from EDC functionality through design and implementation of workflow and data flow within clinical studies.

3) Scope

This chapter provides information on the design, development, and implementation concepts related to setting up a study (sometimes called an application) in an EDC system. Practices, procedures, and recommendations are proposed for clinical data managers to design and implement EDC-facilitated workflow and data flow for automation, connectivity, decision support, and data mining within and across clinical studies.

While many of the tasks described in this chapter may be joint responsibilities between different functional areas of an organization, those tasks associated with the collection, processing and storage of data are covered here. These responsibilities are the core of the Clinical Data Management profession. As such, the clinical data manager is usually responsible for the overall implementation of any study application.

Recommendations for EDC system selection were covered in the chapter “Electronic Data Capture – Selecting an EDC System”. Recommendations for study conduct and study closeout using EDC are addressed in the chapter “Electronic Data Capture – Study Conduct, Maintenance and Closeout.”

4) Minimum Standards

As a mode of data collection and management in clinical studies, EDC systems have the potential to impact human subject protection as well as the reliability of trial results. Regulation and guidance are increasingly vocal on the topic. The E6(R2) Good Clinical Practice: Integrated Addendum to ICH E6(R1) contains several passages particularly relevant to use of EDC systems in clinical studies.

Section 2.8 “Each individual involved in conducting a trial should be qualified by education, training, and experience to perform his or her respective tasks.”11

Section 2.10, “All clinical trial information should be recorded, handled, and stored in a way that allows its accurate reporting, interpretation, and verification.”11

Section 5.0 states that “The methods used to assure and control the quality of the trial should be proportionate to the risks inherent in the trial and the importance of the information collected.”11

Section 5.1.1 states that “The sponsor is responsible for implementing and maintaining quality assurance and quality control systems with written SOPs to ensure that trials are conducted and data are generated, documented (recorded), and reported in compliance with the protocol, GCP, and the applicable regulatory requirement(s).” Additionally, Section 5.1.3 states that “Quality control should be applied to each stage of data handling to ensure that all data are reliable and have been processed correctly.”11

Section 5.5.1, states that “The sponsor should utilize appropriately qualified individuals to supervise the overall conduct of the trial, to handle the data, to verify the data, to conduct the statistical analyses, and to prepare the trial reports.”11

Section 5.5.3 states that “When using electronic trial data handling and/or remote electronic trial data systems, the sponsor should: a) Ensure and document that the electronic data processing system(s) conforms to the sponsor’s established requirements for completeness, accuracy, reliability, and consistent intended performance (i.e., validation).”11

The addendum to Section 5.5.3 states that “The sponsor should base their approach to validation of such systems on a risk assessment that takes into consideration the intended use of the system and the potential of the system to affect human subject protection and reliability of trial results,” and item b) requires that the sponsor “Maintains SOPs for using these systems.”11

Section 5.5.3 addendum c-h introductory statement states that “The SOPs should cover system setup, installation, and use. The SOPs should describe system validation and functionality testing, data collection and handling, system maintenance, system security measures, change control, data backup, recovery, contingency planning, and decommissioning.”11

Section 5.5.4 under Trial Management, Data Handling and Recordkeeping, states that “If data are transformed during processing, it should always be possible to compare the original data and observations with the processed data.”11

Similar to ICH E6(R2), Title 21 CFR Part 11 also states requirements for traceability, training, and qualification of personnel, and validation of computer systems used in clinical trials. Requirements in 21 CFR Part 11 Subpart B are stated as controls for closed systems (21 CFR Part 11 Sec. 11.10), controls for open systems (21 CFR Part 11 Sec. 11.30), signature manifestations (21 CFR Part 11 Sec. 11.50), and signature/record linking (21 CFR Part 11 Sec. 11.70). Requirements for electronic signatures are stated in 21 CFR Part 11 Subpart C.12

Recommendations in Section A of the 2007 Guidance for Industry Computerized Systems Used in Clinical Investigations (CSUCI) state that, “Each specific study protocol should identify each step at which a computerized system will be used to create, modify, maintain, archive, retrieve, or transmit source data.”13

Section B of the CSUCI guidance states expectations with respect to Standard Operating Procedures (SOPs): “There should be specific procedures and controls in place when using computerized systems to create, modify, maintain, or transmit electronic records, including when collecting source data at clinical trial sites” and “the SOPs should be made available for use by personnel and for inspection by FDA.”13

Section C reiterates document retention requirements under 21 CFR 312.62, 511.1(b)(7)(ii) and 812.140. Further, section C of CSUCI goes on to state that “When source data are transmitted from one system to another …, or entered directly into a remote computerized system … or an electrocardiogram at the clinical site is transmitted to the sponsor’s computerized system, a copy of the data should be maintained at another location, typically at the clinical site but possibly at some other designated site.” And that “copies should be made contemporaneously with data entry and should be preserved in an appropriate format, such as XML, PDF or paper formats.”13

Section D further specifies 21 CFR Part 11 principles with respect to limiting access to computerized systems used in clinical investigations, audit trails, and date and time stamps.13

Section E likewise provides further detail regarding expectations for security, e.g., “should maintain a cumulative record that indicates, for any point in time, the names of authorized personnel, their titles, and a description of their access privileges” and recommends that, “controls be implemented to prevent, detect, and mitigate effects of computer viruses, worms, or other potentially harmful software code on study data and software.”13

Section F addresses direct entry of data including automation and data standardization; data attribution and traceability including explanation of “how source data were obtained and managed, and how electronic records were used to capture data”; system documentation that identifies software and hardware used to “create, modify, maintain, archive, retrieve, or transmit clinical data”; system controls including storage, back-up and recovery of data; and change control of computerized systems.13

Section G elaborates on training of personnel as stated in 21 CFR 11.10(i) that those who, “develop, maintain, or use computerized systems have the education, training and experience necessary to perform their assigned tasks”, that training be conducted with frequency sufficient to, “ensure familiarity with the computerized system and with any changes to the system during the course of the study” and that, “education, training, and experience be documented.”13
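
The cumulative access record described in Section E can be pictured as a list of dated grants that is queried for a given day. The following is a minimal sketch under stated assumptions, not a prescribed implementation: the `AccessGrant` structure, the privilege names, and the example personnel are all hypothetical and do not come from the guidance or from any particular EDC product.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class AccessGrant:
    """One period during which a person held a set of privileges (hypothetical)."""
    name: str
    title: str
    privileges: tuple[str, ...]
    granted: date
    revoked: Optional[date] = None  # None means the grant is still active


def authorized_on(grants: list[AccessGrant], when: date) -> list[AccessGrant]:
    """Return the grants in effect on the given date.

    A grant counts from its granted date up to, but not including, its revoked date.
    """
    return [
        g for g in grants
        if g.granted <= when and (g.revoked is None or when < g.revoked)
    ]


# Illustrative data only: a role change is recorded as a revocation plus a new grant,
# so earlier privileges remain visible in the record.
grants = [
    AccessGrant("A. Rivera", "Study Coordinator", ("enter_data", "answer_queries"),
                granted=date(2023, 1, 10), revoked=date(2023, 6, 1)),
    AccessGrant("B. Chen", "Investigator", ("enter_data", "sign_forms"),
                granted=date(2023, 1, 10)),
    AccessGrant("A. Rivera", "Lead Coordinator",
                ("enter_data", "answer_queries", "manage_site_users"),
                granted=date(2023, 6, 1)),
]

for g in authorized_on(grants, date(2023, 3, 1)):
    print(g.name, g.title, g.privileges)
```

Because grants are never edited in place, the same list can answer the question for any past date, which is the property the guidance asks of the record.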

The Medicines & Healthcare products Regulatory Agency (MHRA) ‘GXP’ Data Integrity Guidance and Definitions covers principles of data integrity, establishing data criticality and inherent risk, designing systems and processes to assure data integrity, and it also covers the following topics particularly relevant to EDC:

Similar to ICH E6(R2), MHRA Section 2.6 states, “Users of this guidance need to understand their data processes (as a lifecycle) to identify data with the greatest GXP impact. From that, the identification of the most effective and efficient risk-based control and review of the data can be determined and implemented.”14

Section 6.2, Raw Data states, “Raw data must permit full reconstruction of the activities.”14

Section 6.7 Recording and Collection of Data states that “Organisations should have an appropriate level of process understanding and technical knowledge of systems used for data collection and recording, including their capabilities, limitations, and vulnerabilities,” and that the “selected method [of data collection and recording] should ensure that data of appropriate accuracy, completeness, content and meaning are collected and retained for their intended use.”14

Section 6.9 Data Processing states that “There should be adequate traceability of any user-defined parameters used within data processing activities to the raw data, including attribution to who performed the activity.” And that, “Audit trails and retained records should allow reconstruction of all data processing activities….”14

The General Principles of Software Validation; Final Guidance for Industry and FDA Staff (2002) provides guidance regarding documentation expected of software utilized in a clinical trial.14

Section 2.4 “All production and/or quality system software, even if purchased off-the-shelf, should have documented requirements that fully define its intended use, and information against which testing results and other evidence can be compared, to show that the software is validated for its intended use.”14

Section 4.7 (Software Validation After a Change), “Whenever software is changed, a validation analysis should be conducted not just for validation of the individual change, but also to determine the extent and impact of that change on the entire software system.”14

Section 5.2.2 “Software requirement specifications should identify clearly the potential hazards that can result from a software failure in the system as well as any safety requirements to be implemented in software.”14

Good Manufacturing Practice Medicinal Products for Human and Veterinary Use (Volume 4, Annex 11): Computerised Systems (2011) provides the following guidelines when using computerized systems in clinical trials. Though the guidance is in the context of manufacturing, it is included to emphasize the consistency of thinking and guidance relevant to use of computer systems in clinical trials across the regulatory landscape.15

Section 1.0 “Risk management should be applied throughout the lifecycle of the computerised system taking into account patient safety, data integrity and product quality. As part of a risk management system, decisions on the extent of validation and data integrity controls should be based on a justified and documented risk assessment of the computerised system.”15

Section 4.2 states that, “Validation documentation should include change control records (if applicable) and reports on any deviations observed during the validation process.”15

Section 4.5 states that, “The regulated user should take all reasonable steps, to ensure that the system has been developed in accordance with an appropriate quality management system.”15

Section 7.1 states that, “Data should be secured by both physical and electronic means against damage. Stored data should be checked for accessibility, readability, and accuracy. Access to data should be ensured throughout the retention period.”15

Section 7.2 states that, “Regular back-ups of all relevant data should be done. Integrity and accuracy of backup data and the ability to restore the data should be checked during validation and monitored periodically.”15

Section 9.0 states that, “Consideration should be given, based on a risk assessment, to building into the system the creation of a record of all GMP-relevant changes and deletions (a system generated “audit trail”). For change or deletion of GMP-relevant data the reason should be documented. Audit trails need to be available and convertible to a generally intelligible form and regularly reviewed.”15

Section 10.0 states that, “Any changes to a computerised system including system configurations should only be made in a controlled manner in accordance with a defined procedure.”15

GAMP 5: A Risk-based Approach to Compliant GxP Computerized Systems (2008) suggests scaling activities related to computerized systems with a focus on patient safety, product quality, and data integrity. It provides the following guidelines relevant to GxP regulated computerized systems including systems used to collect and process clinical trial data:

Section 2.1.1 states that “Efforts to ensure fitness for intended use should focus on those aspects that are critical to patient safety, product quality, and data integrity. These critical aspects should be identified, specified, and verified.”16

Section 4.2 states that, “The rigor of traceability activities and the extent of documentation should be based on risk, complexity, and novelty, for example a non-configured product may require traceability only between requirements and testing.”16

Section 4.2 further states that, “The documentation or process used to achieve traceability should be documented and approved during the planning stage and should be an integrated part of the complete life cycle.”16

Section 4.3.4.1 states that, “Change management is a critical activity that is fundamental to maintaining the compliant status of systems and processes. All changes that are proposed during the operational phase of a computerized system, whether related to software (including middleware), hardware, infrastructure, or use of the system, should be subject to a formal change control process (see Appendix 07 for guidance on replacements). This process should ensure that proposed changes are appropriately reviewed to assess impact and risk of implementing the change. The process should ensure that changes are suitably evaluated, authorized, documented, tested, and approved before implementation, and subsequently closed.”16

Section 4.3.6.1 states that, “Processes and procedures should be established to ensure that backup copies of software, records, and data are made, maintained, and retained for a defined period within safe and secure areas.”16

Section 4.3.6.2 states that, “Critical business processes and systems supporting these processes should be identified and the risks to each assessed. Plans should be established and exercised to ensure the timely and effective resumption of these critical business processes and systems.”16

Section 5.3.1.1 states that, “The initial risk assessment should include a decision on whether the system is GxP regulated (i.e., a GxP assessment). If so, the specific regulations should be listed, and to which parts of the system they are applicable. For similar systems, and to avoid unnecessary work, it may be appropriate to base the GxP assessment on the results of a previous assessment, provided the regulated company has an appropriate established procedure.”16

Section 5.3.1.2 states that, “The initial risk assessment should determine the overall impact that the computerized system may have on patient safety, product quality, and data integrity due to its role within the business processes. This should take into account both the complexity of the process, and the complexity, novelty, and use of the system.”16

The FDA guidance, Use of Electronic Health Record (EHR) Data in Clinical Investigations, emphasizes that data sources should be documented, and that source data and documents be retained in compliance with 21 CFR 312.62(c) and 812.140(d).17

Section V.A states that “Sponsors should include in their data management plan a list of EHR systems used by each clinical investigation site in the clinical investigation” and that “Sponsors should document the manufacturer, model number, and version number of the EHR system and whether the EHR system is certified by the Office of the National Coordinator for Health Information Technology (ONC).”17

Section V.I states that “Clinical investigators must retain all paper and electronic source documents (e.g., originals or certified copies) and records as required to be maintained in compliance with 21 CFR 312.62(c) and 812.140(d).”17

Similarly, the FDA’s guidance, Electronic Source Data Used in Clinical Investigations recommends that all data sources at each site be identified.18

Section III.A states that each data element should be associated with an authorized data originator and goes on to state, “A list of all authorized data originators (i.e., persons, systems, devices, and instruments) should be developed and maintained by the sponsor and made available at each clinical site. In the case of electronic, patient-reported outcome measures, the subject (e.g., unique subject identifier) should be listed as the originator.”18

Section III.A.3 elaborates on Title 21 CFR Part 11 and states, “The eCRF should include the capability to record who entered or generated the data [i.e., the originator] and when it was entered or generated.” and “Changes to the data must not obscure the original entry, and must record who made the change, when, and why.”18

Section III.A.5 states that the FDA encourages “the use of electronic prompts, flags, and data quality checks in the eCRF to minimize errors and omissions during data entry.”18

Section III.C states that “The clinical investigator(s) should retain control of the records (i.e., completed and signed eCRF or certified copy of the eCRF).” In other words, eSource data cannot be in sole control of the sponsor.18

As such, we state the following minimum standards for the study implementation and start-up using EDC systems.

5) Best Practices

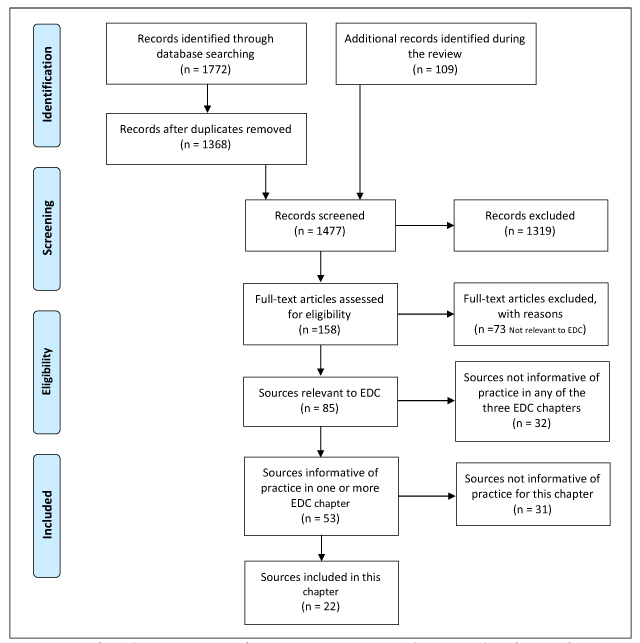

Best practices were identified by both the review and the writing group. Best practices are not required by regulation or recommended by guidance, but do have supporting evidence either from the literature or consensus of the writing group. As such, best practices, like all assertions in GCDMP chapters, have a literature citation where available and are always tagged with a Roman numeral indicating the strength of evidence supporting the recommendation. Levels of Evidence are outlined in Table 3.

Minimum Standards.

| No. | Minimum Standard |
|---|---|
| 1. | Document requirements for all aspects of the eCRF and data collected, processed, or stored by or in the EDC system. |
| 2. | Document sources of data at each site including explicit statement that the EDC system is used as the source where this is the case. |
| 3. | Ensure data values can be traced from the data origination through all changes and that the audit trail of all data changes is immutable, preserved, and available for review. |
| 4. | Use electronic “prompts, flags, and data quality checks in the eCRF to minimize errors and omissions during data entry.”18 |
| 5. | Establish and follow SOPs to ensure that testing, including user acceptance testing (UAT) of the study-specific EDC application, is commensurate with the assessed risk. |
| 6. | Establish and follow SOPs to ensure that testing is completed and documented prior to implementation and deployment to sites. |
| 7. | Establish and follow SOPs to ensure that all users have documented training prior to using the system. |
| 8. | Establish and follow SOPs to limit data access and permissions to authorized individuals and to document data access and permissions. |
| 9. | Establish and follow SOPs for the process of setting up an EDC system for a study. |
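
Minimum standard 3 can be illustrated with an append-only change history in which each entry records who made a change, when, and why, and the original entry is never overwritten. This is a minimal sketch under stated assumptions: the class names, field names, and user identifier are hypothetical, and immutability here holds only inside one Python process. A production system would need storage-level and procedural controls that this sketch does not attempt.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)  # frozen: an entry cannot be altered once written
class AuditEntry:
    field_name: str
    old_value: Optional[str]
    new_value: str
    changed_by: str
    changed_at: datetime
    reason: str


class AuditedRecord:
    """Hypothetical eCRF record whose values exist only as an append-only trail."""

    def __init__(self) -> None:
        self._trail: list[AuditEntry] = []

    def current(self, field_name: str) -> Optional[str]:
        """Latest value for a field, or None if it was never entered."""
        for entry in reversed(self._trail):
            if entry.field_name == field_name:
                return entry.new_value
        return None

    def set_value(self, field_name: str, new_value: str, user: str,
                  reason: Optional[str] = None) -> None:
        """Append a new entry; a change to an existing value must give a reason."""
        old_value = self.current(field_name)
        if old_value is not None and not (reason and reason.strip()):
            raise ValueError("a reason is required when changing an existing value")
        self._trail.append(AuditEntry(
            field_name, old_value, new_value, user,
            datetime.now(timezone.utc), reason or "initial entry",
        ))

    def history(self, field_name: str) -> tuple[AuditEntry, ...]:
        """Every entry for a field, oldest first, returned as a read-only tuple."""
        return tuple(e for e in self._trail if e.field_name == field_name)


record = AuditedRecord()
record.set_value("hemoglobin_g_dl", "13.2", "site_user_01")
record.set_value("hemoglobin_g_dl", "12.3", "site_user_01", "transcription error")
for entry in record.history("hemoglobin_g_dl"):
    print(entry.old_value, "->", entry.new_value, "|", entry.changed_by, "|", entry.reason)
```

Storing the current value only as the last entry of the trail is a deliberate choice in this sketch: there is no separate "current value" column that could drift from the history.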

Best Practices.

| No. | Best Practice |
|---|---|
| 1. | Develop eCRFs with cross-functional teams including but not limited to clinical operations, monitoring, clinical data management, statistics, regulatory affairs, quality assurance, pharmacovigilance/drug safety, and medical leadership. [VI] |
| 2. | Leverage EDC functionality to facilitate the work of sites, monitors, and other study team members to the extent the value outweighs the costs. [III]7,8,19,20 |
| 3. | Design processes to identify and correct data discrepancies at the earliest possible point in study processes. [III]8,20,21,22,23,24 |
| 4. | Ensure adequate attention to the collection, processing, and routing of safety data either through or facilitated by the EDC system. [VI]8 |
| 5. | Ensure that the eCRF design is intuitive and user-friendly for sites, monitors, and other study team members and that instructions and references are readily available. [VI] |
| 6. | Help should be available during the working days and hours of all sites. [VI] |
| 7. | In limited cases the study team may provide data collection forms, instructions and help in local languages. Help should support the number of languages including local dialects needed to communicate with all EDC system users. [VI] |
| 8. | Ensure eCRFs do not introduce bias into the data by containing leading questions or forcing responses. [VI] |
| 9. | Ensure that comprehensive help (written, live, or otherwise) including eCRF entry guidelines, study data definition, and dynamic functionality behavior for all fields, forms, and visits are up-to-date and readily available to sites. [VI]19 |
| 10. | Consider use of available data standards. [VI]8 |
| 11. | Where data standards are used, ensure that the eCRF conforms to the standards so that detail (information content) is not lost in downstream mapping to such standards for submission or data sharing. [VI]8 |
| 12. | The EDC system and all intended data operations such as edit checks and dynamic behavior should be in production prior to enrollment of the first patient. [III]8,9,19,20,21,23 |

Grading Criteria.

| Evidence Level | Evidence Grading Criteria |
|---|---|
| I | Large controlled experiments, meta, or pooled analysis of controlled experiments, regulation or regulatory guidance |
| II | Small controlled experiments with unclear results |
| III | Reviews or synthesis of the empirical literature |
| IV | Observational studies with a comparison group |
| V | Observational studies including demonstration projects and case studies with no control |
| VI | Consensus of the writing group including GCDMP Executive Committee and public comment process |
| VII | Opinion papers |

6) What it Means to Design a Study Application Within an EDC System

Where the advantages of EDC technology are leveraged, filling in an electronic form is quite different from completing a paper form. For example, in an interventional cardiology study, if hemoglobin or hematocrit values below a threshold are entered, a question or form asking about transfusions may be generated as well as assessment information for peri-procedural bleeding. At the same time, an email notification may automatically be sent to the study safety desk. On-screen checks are run to flag out-of-range and logically inconsistent lab values, and the investigator’s assessment of relatedness to the study drug may be required; in this case a discrepant data flag is attached to the form until the investigator’s assessment of relatedness is populated. In this simple scenario, the EDC system added new fields or forms relevant to the patient, provided greater control of data entered in the form, facilitated study workflow, automated tracking of discrepant data, and decreased the gap between the site and central team managing the study, all in real time. This functionality is not available when collecting data on paper forms and, due to the time lag in processing and entering paper forms, immediate action by the central study team is not possible. Thus, in this simple example, the EDC system as implemented for the study would provide significant value over paper data collection through automation, connectivity, and decision support.

EDC systems offer the opportunity to define and enforce workflow of data collection in addition to the data to be collected. While the extent to which workflow and data flow can be automated within an EDC system depends on the functionality offered by each system, the basic functionality described in the interventional cardiology study example is available in most EDC software.
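
The interventional cardiology scenario can be sketched in a few lines to show how one entered value drives new forms, a notification, and a discrepancy flag. This is an illustrative sketch only. The threshold value, form names, flag text, and the `SubjectVisit` structure are hypothetical, and notifications are collected in a list rather than sent by email. Real EDC systems typically configure such behavior through their own study-build tools rather than hand-written code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

HGB_THRESHOLD_G_DL = 8.0  # hypothetical; a real study takes the threshold from the protocol
RELATEDNESS_FLAG = "investigator assessment of relatedness is missing"


@dataclass
class SubjectVisit:
    subject_id: str
    forms: list[str] = field(default_factory=lambda: ["LABS"])
    flags: list[str] = field(default_factory=list)
    notifications: list[str] = field(default_factory=list)


def on_hemoglobin_entered(visit: SubjectVisit, hgb_g_dl: float,
                          relatedness: Optional[str] = None) -> None:
    """React to a hemoglobin entry in the way the scenario describes."""
    if hgb_g_dl >= HGB_THRESHOLD_G_DL:
        return  # value at or above threshold: no dynamic behavior is triggered
    # 1. Add the forms that are now relevant to this patient.
    for form in ("TRANSFUSION", "BLEEDING_ASSESSMENT"):
        if form not in visit.forms:
            visit.forms.append(form)
    # 2. Queue a notification for the study safety desk.
    visit.notifications.append(f"safety-desk: low hemoglobin for {visit.subject_id}")
    # 3. Flag the form until the investigator records relatedness.
    if relatedness is None and RELATEDNESS_FLAG not in visit.flags:
        visit.flags.append(RELATEDNESS_FLAG)


def on_relatedness_entered(visit: SubjectVisit, relatedness: str) -> None:
    """Clear the discrepancy flag once a relatedness assessment is populated."""
    if relatedness.strip() and RELATEDNESS_FLAG in visit.flags:
        visit.flags.remove(RELATEDNESS_FLAG)


visit = SubjectVisit("S-001")
on_hemoglobin_entered(visit, 7.1)
print(visit.forms, visit.flags, visit.notifications)
on_relatedness_entered(visit, "not related")
print(visit.flags)
```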

It is the increase in connectivity, workflow, and data flow automation and decision support that makes use of EDC different from collecting data on paper forms. An EDC-enabled study goes beyond implementation of new technology. Gaining value from EDC requires re-engineering processes and improving decision-making during study conduct [III].8,19,20,25,26,27 The need for site-facing computer systems to provide benefit to sites in order to garner site support has been articulated since the early days of EDC predecessors.6 The mechanisms of site benefit include the aforementioned ways in which information systems add value to organizations.7 For this reason, setting up a study in an EDC system is often referred to as “building a study” rather than creating a study database. Using EDC means that for each data value collected on an eCRF, there is an additional choice of what, if anything, the system should do in response to each possible entry value. In today’s EDC systems, there are often many options. Thus, the data manager should have a thorough understanding of workflow and data flow automation and decision support in addition to EDC system functionality to optimize data-related aspects of study conduct. [VI]

How a human works with a paper form and writing instrument is different from working with a computerized system and related input devices. Because EDC is often more invasive than paper data collection in individual working practices and institutional process flow, these interactions become important in design, testing, and implementation. While professionals field-tested paper forms prior to their use on studies, true human-centered design, usability testing, or implementation monitoring are often appropriate if not necessary in use of EDC. Further, because EDC systems are used by or touch many roles on study teams, training on use of the system for the study is usually broader than in the past.

7) eCRF Design

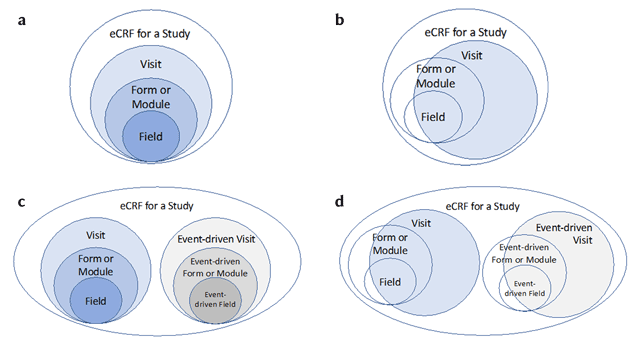

Most study builds start with the static aspects of the electronic CRFs (eCRFs), i.e., the data elements or fields to be collected, their definition, valid response values, layout on the screen, and their organization into forms and visits. EDC systems facilitate different ways of grouping data to be collected or displayed on eCRFs. Such groupings include grouping of data collection fields into modules, modules into forms, and forms into visits, similar to such groupings on paper data collection forms (see Figure 1). Likewise, the fields in modules may differ from form to form and the contents of forms may differ from visit to visit. Just as with paper data collection forms, such module-to-module, form-to-form, or visit-to-visit variability increases the development cost and must be weighed against the value added. [VI] In EDC systems, grouping of data elements into modules, forms, or visits may be part of data definition and affect data storage as well as layout. Thus, eCRF design requires a thorough understanding of the relationship between data definition, grouping, layout, and data storage structure in the specific EDC system. [VI]

Figure 1. Varieties of Alignment of Fields, Forms, and Visits Commonly Supported by EDC Systems. a: EDC systems often reinforce an alignment between fields, forms (or modules), and visits. Sometimes it is a strict hierarchy. b: Some systems may not require fields, forms, and visits to be associated in a strict hierarchy and may support collection of data outside the study visit structure; i.e., forms that are not associated with visits such as log-based forms that span the study like concomitant medication logs or study withdrawal forms. c: Some EDC systems may support event-driven forms and may also have mechanisms or requirements for associating them with visits, other forms, or fields. Examples include repeat assessments or unscheduled visits. d: Some EDC systems may support both event-driven forms and visits as well as forms that occur outside of a visit context. Examples include event-driven forms and log-based forms that span the study such as a log of protocol violations or a log of clinical events.
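The groupings described above can be pictured as a simple nested data structure. The following Python sketch is illustrative only; the class and attribute names are assumptions made for this example and do not correspond to any particular EDC system.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Field:
    name: str
    data_type: str


@dataclass
class Form:
    name: str
    fields: List[Field] = field(default_factory=list)


@dataclass
class Visit:
    name: str
    forms: List[Form] = field(default_factory=list)


@dataclass
class StudyBuild:
    # Forms collected within a visit (strict hierarchy, panel a).
    visits: List[Visit] = field(default_factory=list)
    # Forms outside the visit structure, such as logs (panel b).
    log_forms: List[Form] = field(default_factory=list)


vitals = Form("Vital Signs", [Field("heart_rate", "integer")])
build = StudyBuild(
    visits=[Visit("Screening", [vitals])],
    log_forms=[Form("Concomitant Medications", [Field("medication", "text")])],
)
```

Keeping log forms in a separate collection is one way to represent forms that span the study; a given system may instead attach them to a special visit.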

When designing an eCRF, it is often not known what type of computer(s) will be used for data entry by the end-user. The size and type of screen and input devices such as keyboard and mouse can easily differ across users and at the same time can affect the data entry process. For example, fields “below the scroll” may be more easily missed. Many EDC systems have the capability of allowing for longer or wider forms, as well as multiple ‘forms’ within one eCRF presentation. However, it is good practice to take into consideration the smallest screen available on the market when deciding grouping and layout of data fields on eCRF screens even if doing so may reduce the amount of data collected on a single eCRF screen. [VI] Static aspects of eCRF design that are not specific to EDC are covered in the CRF design chapter of the GCDMP. EDC-specific static aspects of eCRF design are covered in subsequent sections of this chapter.

Most EDC systems have functionality that can be leveraged to guide site staff in data entry and actions to be taken in response to entered data. How the EDC system responds to entered data and other user actions within the system is called dynamic behavior because the system behavior differs based on the user input or action. EDC functionality for dynamic behavior is used to automate or otherwise constrain workflow and data flow in and facilitated by the EDC system. Automation, such as sending a notification email to the safety desk when an adverse event indicated as serious is entered, is a form of dynamic behavior.
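As a minimal sketch of the notification example, dynamic behavior can be thought of as a rule that maps an entered record to zero or more actions. The function name, record keys, and email address below are hypothetical; real EDC systems configure such rules through their own tooling.

```python
from typing import Dict, List

SAFETY_DESK = "safety-desk@example.org"  # hypothetical address


def notifications_for_adverse_event(record: Dict[str, object]) -> List[Dict[str, str]]:
    """Return the notifications that entry of this adverse event should trigger."""
    if record.get("serious") is not True:
        return []  # non-serious events trigger nothing in this sketch
    return [{
        "to": SAFETY_DESK,
        "subject": f"Serious adverse event reported for subject {record['subject_id']}",
    }]
```

Returning the notifications instead of sending them keeps the rule easy to test before it is connected to a mail service.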

Automating workflow and data flow is a major way that EDC systems can provide value to users and organizations. Functionality for automation should be exploited to the extent helpful to users and to the extent practical within a study [VI].7,8 However, workflow and data flow automation requires a significant amount of experience. Design of new automation, i.e., not yet tried by the Data Manager or organization, benefits greatly from application of human-centered design principles such as early and iterative involvement of users in testing dynamic behavior. Automation constrains the options available to system users. Limiting flexibility without careful consideration of all eventual variation that may occur can frustrate users and cause delays when unanticipated exceptions occur. In a 2003 publication, Kush et al. give an example of an EDC system that automated locking of patient data after internal review such that the sites could no longer make changes. When legitimate changes occurred after internal review, sites were required to call in and request the data to be unlocked. To work around the cumbersome process, sites entered changes into comment fields, which required extensive sorting out prior to analysis.8 Automation and other constraints that affect workflow and data flow add tremendous value when they work as intended and gracefully handle exceptions. On the other hand, frequent exceptions or exceptions that are not gracefully handled quickly erode the value gained from the automation. Thus, any automation such as alerts, routing, state changes, or triggering dynamic fields, forms, or visits should be carefully designed and user-tested. [VI]

Automation and other constraints on eCRFs also have the potential to introduce bias into the data by pre-populating or forcing responses. Where such bias is possible, forego the planned automation or constraint. [VI] Statistical review and approval of automation and constraints will help identify potential constraint-caused bias. Data surveillance may be effective in detecting some instances of bias in key safety and efficacy parameters.

Dynamic behavior can be triggered by an individual field or by some relationship between multiple fields or forms. Further, dynamic behavior may act on an individual field or multiple fields. Consider handling of dates. A site in Europe may prefer entering dates using the “dd-mmm-yyyy” format, whereas a site in the United States may prefer using “mmm-dd-yyyy”. Some EDC systems allow site or user-specific settings so that a user can enter dates in their preferred format and the system converts and stores the data in a standard date format. Further dynamic behavior pertaining to dates includes functionality to prevent or facilitate entry of partial dates that may include alerts to the user or flagging values within the database and alternate processing of flagged partial dates. In the date examples, the dynamic behavior is triggered by and acts on single fields (the dates). In this case, the dynamic behavior includes facilitating different entry formats, how the data will be processed by the EDC system, and the workflow and data flow associated with entry of valid values and exceptions. In the interventional cardiology study referenced above, the dynamic behavior includes triggering a new form in response to an entered data value. The example illustrates dynamic behavior triggered by indication of a transfusion (an individual field) and acting on multiple fields through creation of a new bleed form with fields for bleed-related lab values and bleed assessment details. Thus, when designing an eCRF, the designer evaluates each field for whether it is a trigger for dynamic behavior either alone or in concert with other fields and whether the desired behavior pertains to an individual field or to multiple fields. [VI]
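The date handling described above can be sketched as follows. This is an illustration under stated assumptions, not the behavior of any specific EDC system: the site preference keys, the choice of ISO 8601 as the storage format, and the function name are all hypothetical, and month abbreviations are assumed to be English (the default C locale).

```python
from datetime import datetime
from typing import Tuple

# Hypothetical site-level preferences mapped to parsing formats.
SITE_FORMATS = {"EU": "%d-%b-%Y", "US": "%b-%d-%Y"}


def normalize_date(text: str, site_pref: str) -> Tuple[str, bool]:
    """Return (stored_value, is_partial) for a date typed by a site user."""
    try:
        parsed = datetime.strptime(text, SITE_FORMATS[site_pref])
        return parsed.date().isoformat(), False
    except ValueError:
        pass
    # Fall back to partial dates: month and year, then year only.
    for entry_format, stored_format in (("%b-%Y", "%Y-%m"), ("%Y", "%Y")):
        try:
            parsed = datetime.strptime(text, entry_format)
            return parsed.strftime(stored_format), True
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")
```

The partial flag lets downstream processing treat incomplete dates differently, for example by alerting the user or routing them to alternate handling.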

Dynamic behavior can be confusing to system users because by definition the system behavior differs based on the input. Thus, dynamic behavior should be documented and emphasized in training [VI].19 Dynamic aspects of EDC study builds are covered in subsequent sections of this chapter.

Dynamic behavior may also be used to facilitate study operations such as Source Document Verification (SDV). For example, in 2008 Nahm et al. recommend leveraging the EDC system to support field-level SDV to support point estimation of the source-to-EDC error rate [III].28 A similar process has been recommended for measuring the medical record abstraction error rate.29,30 Other operational processes such as two-rater classification of clinical events and adjudication can also be facilitated through dynamic behavior in EDC systems. Use of EDC functionality to facilitate study operations should be used where the value added outweighs the costs. [VI]

Whether static or dynamic forms are used, use of data standards and form libraries to facilitate reuse of eCRF forms and their features can decrease costs associated with study start-up [VI].8,20

8) Basic Form Features

The most basic function of EDC software is the ability to build and deploy web-based electronic forms for the entry of data and to store the entered data. In most EDC systems, data elements are associated with a data collection structure when they are first added to the system. At this time consideration should be given for use of special characters that could potentially be in use. [IV.] Every time the data element is implemented as a field in a form, the associated data collection structure is used, standardizing it throughout the study. Common data collection structures in EDC systems include free text, many options for semi-structured text, radio buttons, dropdown lists, and checklists. The use of pre-defined answer choices such as those in radio buttons, checklists, and dropdown lists provides constraints during entry and, along with on-screen edit checks, are associated with higher data quality.31

Free text fields allow the user to type in character strings. Free text fields often require specification of a length where the length is sometimes limited by the system or by functionality in downstream data systems. Consistency of responses is challenging with completing free text fields and, for this reason, with the exception of verbatim text to be coded later, they are rarely used for safety and efficacy endpoints. Free text fields are used when constraining the possible responses is not desired, for example, comment fields or collection of site explanations of protocol deviations.

Semi-structured text fields, however, are used often and have many variations. For example, response characters can be limited to alpha or numeric characters. Integer data can be collected and limited to a number of digits. Floating point, i.e., numbers without a fixed number of digits before or after the decimal, and fixed point, i.e., numbers with a fixed number of digits after the decimal, can be used where fractional parts are expected. Semi-structured fields may also constrain the format of entered data, such as parentheses around a phone number area code and a dash between the third and fourth digit or specification of a date format. Semi-structured text fields constrain entered data and increase consistency in the collected data. Semi-structured fields should maximally constrain entered data while accommodating entry of all possible accurate response options. [VI] For example, assuming the system has the ability, the numeric data element heart rate for adult humans in beats per minute should be constrained to an integer value. While there will be disagreement about the range of values possible, the fastest reported human heart rate is 480 beats per minute.32 A range of between zero and 250 beats per minute would not be unreasonable for a field constraint for a study in normal human adults. The aforementioned recommendation requires balancing clinical representativeness for the rare case such as 480 bpm or zero at death with the error-prevention benefit of a tighter range. Consideration should also be given to fields of mixed type. For example, many lab values are typically a numeric field, but some test results may be reported with a >, <, or + symbol, or as ‘positive’, ‘negative’, ‘few’ or ‘trace’.
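The heart rate constraint can be expressed as a small validation routine. The sketch below assumes the zero to 250 beats per minute range suggested above as defaults; the function name and error messages are illustrative.

```python
import re


def validate_heart_rate(raw: str, low: int = 0, high: int = 250) -> int:
    """Accept only a whole number of beats per minute within [low, high]."""
    text = raw.strip()
    if not re.fullmatch(r"[0-9]+", text):
        raise ValueError("heart rate must be a whole number of beats per minute")
    value = int(text)
    if not low <= value <= high:
        raise ValueError(f"heart rate must be between {low} and {high} bpm")
    return value
```

A study that expects extreme values could widen the range or downgrade the range check to a query instead of a hard rejection.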

Radio buttons allow selection of only one choice from a usually short list of options. Radio buttons provide the maximum constraint possible. As such, this data collection structure should be used when the response options are known and standardized. [VI] Implementation of radio buttons should include a mechanism to de-select, i.e., un-select, a previously selected response. [VI]

Like radio buttons, checklists are usually used for shorter lists because all response options are displayed on the screen. Checklists differ from radio buttons in that they allow the user to select more than one option, i.e., check all that apply. Also, like radio buttons, checklists should be used when the response options are known and standardized. [VI] Implementation of checklists should include a mechanism to de-select, i.e., un-select, a previously selected response. [VI]

Dropdown lists allow the selection usually of only one choice and provide a significant amount of constraint. A dropdown list can be used in any situation appropriate for radio buttons but requires one or more additional clicks; thus, for short lists, radio buttons are preferred. [VI] Dropdown lists are often used for longer lists to save space on the screen. However, implementing dropdown lists where a scroll is required should be approached with caution because items “below the scroll” may be more likely to be missed. [VI] A variation on both the free text and dropdown list is type-ahead functionality where the choice options are restricted by matching the options to the characters typed by the user. Type-ahead functionality in combination with a dropdown list may allow use of very long lists including some clinical controlled terminology sets such as the International Classification of Diseases, or Current Procedural Terminology. Implementation of dropdown lists should include a mechanism to de-select, i.e., un-select, a previously selected response. [VI]

Radio buttons, checklists, and dropdown lists collect discrete response options. The underlying data, however, may not always be discrete. For example, an eCRF may collect the following data element, “Does the participant have hypercholesterolemia?” with the response options yes and no, rather than collect the raw cholesterol value. Although this discretization also represents a clinical diagnosis that admittedly may take into account more than a single lab value, it reduces the information content of the data. The yes/no response would be useless if a different definitional range for hypercholesterolemia were to be applied. Continuous ratio or interval data should be discretized to ordinal or nominal data only after careful consideration. [VI]

9) Required versus not required fields

A field stated as required in a study protocol may or may not be implemented as such in an EDC system. Certain data may be critical to safety or efficacy endpoints of a study. However, there may be times when the data are legitimately not available. Functionality for implementing a field as required in an EDC system differs across systems. In some systems, marking a field as required means that the user cannot save the form or move past the field or form until a value is supplied. This is often called a “hard stop” or a “hard required”. Many EDC systems offer an override feature where a user is prompted to provide a missing value and allowed to still move forward without one. This is sometimes called a “soft required” meaning that a value for the field is expected and if the needed values are not entered, the user can acknowledge or override the alert. The missing value may or may not be tracked depending on the functionality offered by the EDC system. Whereas paper-based data collection mandated a query be sent to the site after missing data were discovered, in EDC this can be a one-step process, with the user confirming that the value is missing and, possibly, providing a reason why. Where data are expected to be missing, it may be appropriate to include response options for the user to indicate a reason the data are not provided, e.g., “sample not collected”, “assessment not done”, “not applicable”, “data not available/not retrievable”, “asked but unknown”, “asked but subject refused to answer”, “actual value invalid”, etc. [VI]
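The difference between hard and soft required fields can be sketched as a single check. The status strings, the `Required` enumeration, and the shortened list of missing-data reasons are assumptions for illustration; actual behavior and terminology vary by EDC system.

```python
from enum import Enum
from typing import Optional


class Required(Enum):
    NO = "not required"
    SOFT = "soft required"
    HARD = "hard required"


# A subset of the reasons listed above, for illustration.
MISSING_REASONS = {"sample not collected", "assessment not done", "not applicable"}


def check_required(value: Optional[str], mode: Required,
                   missing_reason: Optional[str] = None) -> str:
    """Return 'complete', 'blocked', 'prompt', or 'missing with reason'."""
    if value not in (None, ""):
        return "complete"
    if mode is Required.NO:
        return "complete"
    if mode is Required.HARD:
        return "blocked"  # hard stop: the form cannot be saved
    if missing_reason in MISSING_REASONS:
        return "missing with reason"  # user confirmed the value is missing
    return "prompt"  # soft required: alert the user, allow override
```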

Some data are conditionally required. For example, a pregnancy test result is often conditionally required based on gender, and the specifics of an adverse event are required when an adverse event is indicated as having occurred. Many EDC systems include functionality to implement hard or soft constraints for conditionally required data. Implementing such functionality decreases user data entry time and frustration and should be implemented where it exists. [VI]

10) Calculated Derived fields

Most EDC systems have the ability to derive fields using basic calculations and algorithms. For example, Body Mass Index (BMI) is a calculation dependent on the subject’s height and weight. A field may be placed in the eCRF that automatically calculates and displays the BMI during entry for the site. Other examples include unit conversions, calculating weight-based drug dosing, scoring rating scales, and applying eligibility and other study criteria to raw data. This functionality is useful in providing decision support to sites. Similar to calculated fields, there are times when it is helpful for a site to have the ability to ‘see’ the data that was entered at a previous study time point, form, or visit. The user should not be able to change the re-displayed data copied into the current visit. [VI] If the original data changes, the calculated or copied value should automatically be updated. [VI] How copied or calculated fields work should be emphasized in training and a mechanism should be in place to indicate to the user how and why data has appeared in a form. [VI]

Algorithms to calculate values may be run at the time of entry or afterward and stored but not displayed. Algorithms to calculate values should be run at the time of entry and calculated values should be displayed if they are used in decision-making at the clinical investigational site or if edit checks are based on the calculated value. [VI] If calculated derived fields are to be used, they should not be editable by the site, to ensure a consistent calculation is performed across the study population. [VI] Calculated derived values for the purpose of data analysis are usually not programmed into EDC systems; rather, they are programmed as part of building analysis data sets. Calculations are the result of algorithms; like other computer programs, procedures should exist to determine the extent of testing and the processes by which testing and documentation of testing should occur [I].12
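As a minimal sketch of a calculated field, BMI (weight in kilograms divided by the square of height in meters) can be modeled as a read-only value derived from its source fields. The class and attribute names are hypothetical, and rounding to one decimal place is an assumption for display.

```python
from dataclasses import dataclass


@dataclass
class VitalsForm:
    weight_kg: float
    height_cm: float

    @property
    def bmi(self) -> float:
        """Derived on every read, so it always reflects the current source values."""
        height_m = self.height_cm / 100
        return round(self.weight_kg / height_m ** 2, 1)


form = VitalsForm(weight_kg=70, height_cm=175)
first_bmi = form.bmi      # computed from the values as entered
form.weight_kg = 80       # the site corrects the weight
updated_bmi = form.bmi    # the derived value follows the correction
```

Because `bmi` is a property without a setter, an attempt to assign to it raises `AttributeError`, which mirrors the recommendation that sites not be able to edit calculated values.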

11) Dynamic fields

Many EDC systems have the ability to conditionally display a field (or not), for example, based on a previously entered data value. In this case, the field on which the condition depends is referred to as a trigger field, and the conditional logic is referred to as the trigger. This is often referred to as ‘skip logic’, ‘skip pattern’, ‘dynamic branching’, or ‘dynamic field’. For example, if collecting whether a procedure was performed, a lead-in question might be asked, “Did the subject complete the procedure?” If the answer is “Yes”, then the eCRF will display questions specific to the procedure. If the answer is “No”, then the eCRF will show only a drop-down select list or text box for the site to record the reason the procedure was not performed. Displaying or activating fields only when a response is valid is a form of constraint and prevents discrepant data from being entered. Such constraints should be implemented where feasible. [VI]

Some systems perform this feature in real time while other systems apply such rules once the form is saved. In the latter case, the functionality is limited to fields on subsequent forms. If the EDC system supports complex dynamic field branching in ‘real time’, sometimes called multi-layered dynamics, then use of the feature to control entry is recommended to catch errors at the earliest possible point or to altogether prevent them. [VI] If the EDC system does not have real-time branching functionality, clear instructions for completion as well as edit checks to catch logically discrepant data should be used. [VI] Dynamic field behavior should be emphasized in training and a mechanism should be in place to indicate to the user how, why, and when a dynamic field appears. [VI]
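The procedure example can be sketched in two parts: a rule that decides which fields to display, and an edit check that catches logically discrepant data where real-time branching is not available. Field names such as `procedure_date` are hypothetical.

```python
from typing import Dict, List, Optional

TRIGGER = "procedure_completed"


def visible_fields(responses: Dict[str, Optional[str]]) -> List[str]:
    """Fields to display, given the answer to the trigger field."""
    answer = responses.get(TRIGGER)
    if answer == "Yes":
        return ["procedure_date", "procedure_duration_min"]
    if answer == "No":
        return ["reason_not_performed"]
    return []  # nothing to show until the trigger is answered


def discrepant_fields(responses: Dict[str, Optional[str]]) -> List[str]:
    """Edit check: fields holding data that the trigger answer does not allow."""
    allowed = set(visible_fields(responses)) | {TRIGGER}
    return sorted(
        name for name, value in responses.items()
        if value not in (None, "") and name not in allowed
    )
```

Deriving the edit check from the same rule as the display logic keeps the two from drifting apart when the form changes.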

12) Dynamic Forms

In EDC systems, fields are associated with forms (or item groups or modules of forms), and forms may (Figure 1a) or may not (Figure 1b) be associated with a study visit. Similar to dynamic fields, many EDC systems support dynamic forms, i.e., forms that appear only when a subject meets a certain criterion such as entry of a particular data value. A common example of a dynamic form is a form for prostate cancer screening that only needs to be completed for male participants. Because dynamic forms do not always appear to be available in the EDC system, e.g., the prostate screening form will not appear in the system if a participant is female, they have the potential to confuse users. Dynamic form behavior should be emphasized in training and a mechanism should be in place to indicate to the user how, why, and when a dynamic form appears. [VI]

Use of dynamic forms requires considering how the data on dynamic forms activated in error are handled when the form is subsequently inactivated. For example, consider the case where the sex of a patient is incorrectly entered as female, generating dynamic sex-specific forms, and is subsequently corrected to male. Different EDC systems handle this scenario differently. Because the origin and all changes to data should be recorded and immutable,11,12 the removal of dynamic forms generated in error and any data entered on them should be permanently tracked by the system. [I]
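One way to picture this requirement is a casebook that hides a deactivated form without deleting its data and appends every activation and deactivation to an audit trail. This is a simplified sketch; the class names and audit entry keys are assumptions, and a Python list only approximates the immutability a validated system would enforce.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class FormInstance:
    data: Dict[str, str] = field(default_factory=dict)
    active: bool = True


@dataclass
class Casebook:
    forms: Dict[str, FormInstance] = field(default_factory=dict)
    audit_trail: List[dict] = field(default_factory=list)

    def _log(self, user: str, action: str, form_name: str, reason: str) -> None:
        # Entries are only ever appended, never edited or removed.
        self.audit_trail.append({
            "when": datetime.now(timezone.utc).isoformat(),
            "user": user, "action": action, "form": form_name, "reason": reason,
        })

    def activate(self, form_name: str, user: str, reason: str) -> None:
        self.forms.setdefault(form_name, FormInstance()).active = True
        self._log(user, "activate", form_name, reason)

    def deactivate(self, form_name: str, user: str, reason: str) -> None:
        # Hide the form from the user but keep any data entered on it.
        self.forms[form_name].active = False
        self._log(user, "deactivate", form_name, reason)
```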

Dynamic forms such as repeat, event-driven, or unscheduled assessments can be automatically triggered as just described or they can be manually triggered. Some EDC systems support repeat form functionality where a form can be set up to allow site users to manually trigger a new instance of the form. For example, some studies may allow for or require repeat assessments for abnormal vital sign, laboratory, or ECG results. These could be implemented as a repeat form if the EDC system supports this functionality. Using built-in system functionality for repeat forms often also automatically maintains the association of the records for repeated assessment results with the visit in which the original result was measured. However, there is substantial variability in if and how EDC systems support repeatable forms; for example, some systems only allow for repeatable forms in association with unscheduled visits. Manually triggered dynamic forms such as repeatable forms are usually used when the necessity of the additional form instance is (1) dependent on the participant’s course or resulting data or (2) only occurs for a subset of the participants.

These manually or “site user-triggered” forms require consideration at set-up for how to maintain the association of the data with the appropriate time point or visit. In other words, where the EDC system does not or cannot support automatic association of dynamic form data with the needed time point or triggering event, special provisions for referential integrity must be made, such as requiring entry of the number or date of the event or visit with which the data on the new form should be associated. [VI] Further, the appearance of the new instance on the system should be clearly distinct from a visit. [VI]
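Where the system does not maintain the association automatically, a referential integrity check along the following lines could flag repeat form instances whose recorded visit does not exist for the subject. The names used here are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class RepeatAssessment:
    form_name: str
    parent_visit: str  # visit in which the original result was measured
    data: Dict[str, str]


def orphaned_instances(instances: List[RepeatAssessment],
                       visits: Set[str]) -> List[RepeatAssessment]:
    """Repeat forms that reference a visit not present in the casebook."""
    return [item for item in instances if item.parent_visit not in visits]
```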

Many EDC systems have built in functionality for the user to enter the actual date of data collection and associate the date with the data collected. This data is both ‘meta-data’ (data about the data), as well as part of the clinical data in the EDC system. It is often assumed that an assessment or collection date can be derived from the visit date if needed. Thus, physical exam or vital signs forms often lack assessment or collection date, presumably to decrease data entry burden on the site. However, some studies permit assessments to occur within a window of the scheduled or actual visit. These are often implemented as dynamic forms. Omitting a visit date for dynamic forms or forms within the visit results in information loss by losing the association of the assessment data with the actual date on which it was collected. We recommend explicit association of the data to the collection date for better traceability as articulated in ICH E6(R2). [VI]

Determining how to best implement dynamic forms depends on capabilities of the EDC system and complexity of the study. When used multiple times within a study or program, how dynamic forms are triggered and completed and how the content and behavior appear to site users should be consistent to decrease the potential for user confusion. [VI] For example, consistently using dynamic forms in similar situations such as all repeatable forms or event-driven forms, and using the same process for triggering, completing, and correcting them across all form instances will go a long way toward usability.

13) Dynamic Visits

Similar to forms, some visits are expected for a study, i.e., scheduled per protocol, whereas others are conditional, that is some visits are only needed for a subset of the participants and are driven by events that occur during the study. Expected visits are usually described as such in the study visit schedule within the protocol and implemented as such in the eCRF design and EDC system. Like event-driven forms, event-driven visits may or may not occur and are created as needed. Event-driven or otherwise unscheduled visits are not expected to occur for every participant. Many EDC systems support event-driven visits by facilitating their display (or not) based on data entered for a patient or by manual triggering. In an oncology trial for example, when a patient meets a certain criterion he or she may move to a different treatment group with a different set of visits. In some EDC systems, the ability to auto-skip or hide visits is an option. For example, a field is answered in a visit form stating the subject discontinued from the study and the remaining visits are skipped or hidden from the visit schedule and are no longer expected for that subject.

Similar to their form counterparts, referential integrity for repeatable (sometimes called multi-occurring) and event-driven visits often requires special consideration. For example, expected visits have a minimum count of one and a maximum count of one while event-driven visits may or may not occur and they may occur multiple times for a patient within a study; i.e., a minimum count of 0 and often a maximum count greater than one. Providing for referential integrity means that the data collected on dynamic visits should be associated with the correct triggering event as well as the correct time point. To accomplish this, typically these visits should be declared and set-up as such, as noted in Figure 1c and 1d. Dynamics may impact data entry efficiency and system speed so clinical data managers should weigh the benefit versus the possibility of overloading sites with confusing or complicated dynamic functionality when considering dynamic visits. [VI] Like dynamic forms, dynamic visits should be taken into consideration in data status reporting.
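The minimum and maximum counts described above can be declared per visit and checked against what actually occurred, which also supports data status reporting. The visit names and rule table below are assumptions for illustration.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

# (minimum, maximum) occurrences per subject; None means no upper limit.
VISIT_RULES: Dict[str, Tuple[int, Optional[int]]] = {
    "Screening": (1, 1),      # expected visit
    "Week 4": (1, 1),         # expected visit
    "Unscheduled": (0, None), # event-driven visit
}


def cardinality_problems(occurred: List[str]) -> List[str]:
    """Compare the visits recorded for one subject against the declared counts."""
    counts = Counter(occurred)
    problems = []
    for visit, (low, high) in VISIT_RULES.items():
        n = counts[visit]
        if n < low:
            problems.append(f"{visit}: expected at least {low}, found {n}")
        elif high is not None and n > high:
            problems.append(f"{visit}: expected at most {high}, found {n}")
    return problems
```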

14) Decision Support

Decision support is one of the major ways that information systems can add value.7 Automation, discussed previously, is one way of providing decision support. Automatically sending an email notification to the medical monitor when an adverse event indicated as serious is reported is an example of automation. Decision support may leverage automation; for example, the alert to the medical monitor might include additional data and highlight fields pertinent to decision making. Signal detection algorithms that run over data as they are entered or run nightly to detect visits outside the protocol-defined window, disruptions in study medication adherence, or prohibited concomitant medications that trigger automated alerts to the site and site monitor are other examples. Collecting raw data and having the EDC system provide the calculated values in real-time when sites require the calculated values for decision-making and are using the EDC system when making the decision, such as having the EDC system calculate weight-based heparin dosing for a trial in the operative setting is another example. Not all decision support leverages automation. Data status reports and exception reports, discussed in EDC Chapter 3 “Electronic Data Capture – Study Conduct, Maintenance, and Closeout” are examples of decision support without automation as are data visualizations to support signal detection. To be effective, the decision support must be provided at the time and location where the decision is being made. Additional examples of decision support in conjunction with automation provided to study participants and study personnel are described in Cramon et al. (2014) and Mitchel (2008).20,33
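As one small example of a signal detection rule, a check for visits outside the protocol-defined window might look like the sketch below. It assumes the window is expressed as a target study day plus or minus a number of days counted from a baseline date; the function and parameter names are hypothetical.

```python
from datetime import date


def visit_out_of_window(baseline: date, visit: date,
                        target_day: int, window_days: int) -> bool:
    """True when the visit falls outside target_day +/- window_days from baseline."""
    actual_day = (visit - baseline).days
    return abs(actual_day - target_day) > window_days
```

A result of `True` could drive an alert to the site and site monitor at entry, or feed an exception report that is reviewed periodically.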

15) Form Instructions

From a cognitive engineering standpoint, forms serve as an extension of an individual’s thinking.34 Good form design minimizes cognitive load on the user, i.e., the number or complexity of mental operations that a form completer needs to perform. Thus, the best form completion instructions are those that are not needed because the structure of the form makes correct completion obvious and prevents incorrect completion. In the remote collection of study data, as in the case with EDC, such extensive constraint is often not possible. For example, where a site has only year or month and year for a medical history item, studies would rather have lower resolution than no data. Sometimes the flexibility is needed to account for expected variability while in other situations the lack of system functionality requires it. Form completion instructions fill the gap between the ideal of complete constraint and the reality of needing some flexibility on the user interface and are usually required. Most EDC systems offer options for form completion instructions that go far beyond those available to studies using paper forms. For example, most EDC systems provide additional opportunities to co-locate instructions with the fields to which they pertain via mouse-over or other field-specific and user-activated help. Field-specific instructions should be as close as possible to the field to which they pertain and available to the user with minimal barriers to access.35 While this leans away from provision of form completion instructions as a separate document, instructions are the minimum requirements to support consistency where options exist. [VI] A separate instruction document is better than optionality without clarification. [VI] There is a trade-off between (1) the need to cover options with instructions and (2) the amount of time needed to specify and add them into an EDC system. 
For more information on form completion guidelines, refer to the GCDMP’s CRF Design and CRF Completion Guideline chapters.

16) Data Integration Set-up

Data independence is the ability to change data values and logical or physical structure of the data without changing the software application that uses the data.36 Most clinical research software today utilizes an independent underlying database management system. Thus, referring to the “clinical database” today usually means the data stored in the database utilized by the EDC software.

The capability to extract data from database systems and transfer the data to another system is a feature of all modern database systems. Yet integrating data from other sources with EDC systems remains a challenge for 77% of respondents to a recent industry survey.9,10 The major areas of difficulty included (1) integration issues, (2) EDC system limitations, and (3) technical demands of support staff.9 At the same time, independent surveys have documented the increase in number of data sources in clinical studies9,37,38 and a doubling of the number of data points collected for protocols between 2007 and 2017.9 The two most recent surveys reported that 100% of respondents’ studies use EDC.9,38 Thus, it is not likely that EDC will be eclipsed in the near future. However, the proportion of study data collected through EDC systems has begun to give way to the increasing volume of data collected through other data sources.10 For these reasons, integration of data from other sources with data collected through EDC systems grows in importance. Though not always the case, assuming that all collected data will be analyzed implies that the data will be integrated at some point between their acquisition and analysis. The best practices of (1) active management through near real-time data acquisition and review (see EDC Chapter 3), and (2) identifying and resolving data discrepancies and operational problems at the earliest practical point in time [III]8,20,21,22,23,24 give heavy weight toward integrating data earlier rather than later in study data processing pipelines.

The functionality needed for data integration and associated data processing should be decided and planned early and set up along with the study EDC system. [VI] General methods for integration of external data are described in the GCDMP chapter on Integration of External Data. There are multiple approaches to integrating data on studies using EDC for clinical data capture, including: a) importing batch data into the EDC system, b) integrating data in a separate repository, c) real-time or near real-time interfaces between the EDC system and other systems, and d) relying on sites accessing an external system for during-study data needs and integrating the data after the fact. These main approaches are described below.

a) Importing batch data in the EDC system

Most EDC systems offer functionality to import, integrate, process, and display externally collected or externally managed study data such as data from central clinical labs, core labs, ePRO systems, and central reading centers. Some EDC systems support only batch data transfers rather than live system-to-system interfaces. EDC systems offer different levels of functionality for common processing of imported data such as staging incoming data files, pre-load exception checks, importing the data into the EDC system, edit checks to reconcile imported data with other clinical data, and functionality to track the disposition of identified discrepancies.
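The pre-load exception checks mentioned above can be illustrated with a minimal sketch. It assumes a hypothetical comma-delimited central lab file; the column names, the `preload_exceptions` function, and the two row-level rules are illustrative assumptions and are not drawn from any particular EDC system or data transfer specification.

```python
import csv
import io

# Hypothetical layout of an incoming central lab file; real data transfer
# specifications are study- and vendor-specific.
REQUIRED_COLUMNS = ["subject_id", "visit", "test_code", "result", "collection_date"]


def preload_exceptions(file_text, enrolled_subjects):
    """Screen one staged batch file; return (loadable_rows, exceptions)."""
    reader = csv.DictReader(io.StringIO(file_text))
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        # A structural problem rejects the whole file before any row is loaded.
        return [], [{"row": None, "reason": "missing columns: " + ", ".join(missing)}]

    loadable, exceptions = [], []
    for row_number, row in enumerate(reader, start=2):  # row 1 is the header
        if row["subject_id"] not in enrolled_subjects:
            exceptions.append({"row": row_number, "reason": "unknown subject"})
        elif not (row["result"] or "").strip():
            exceptions.append({"row": row_number, "reason": "empty result"})
        else:
            loadable.append(row)
    return loadable, exceptions


batch = (
    "subject_id,visit,test_code,result,collection_date\n"
    "1001,WEEK4,ALT,32,2024-03-01\n"
    "9999,WEEK4,ALT,40,2024-03-01\n"
    "1002,WEEK4,ALT,,2024-03-02\n"
)
rows, problems = preload_exceptions(batch, enrolled_subjects={"1001", "1002"})
```

In this sketch only the first data row is passed on for loading; the other two are held back with a reason so that their disposition can be tracked with the data provider.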

Though vendors have argued for the EDC system to serve as the data integration hub for studies and organizations,26,39 such comprehensive integration is seldom the case. Some EDC systems require manual imports of external data. Support for cumulative versus incremental imports varies. Some EDC systems do not support standard data processing functions such as discrepancy identification, discrepancy resolution, and change tracking for imported data. The decision to import and integrate external data depends on the study needs, the functionality available in the EDC system, and the resources required to apply that functionality. Clinical data managers should understand how data collected or maintained outside an EDC system will be used, who will use it, and for what purpose. Equally important are which system will receive changes to external data, which system will maintain the audit trail, and how audit trail information will be exchanged. The answers to these questions help determine the extent and timing of data integration. Similar situations exist with exchange of data between EDC systems and common clinical study infrastructure systems such as pharmacy, safety (where safety data are managed in a separate system), and CTMS systems. Thus, the best practice recommended here is to integrate data where the data are needed for site decision-making and the value of doing so outweighs the costs. [VI]
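The difference between cumulative and incremental imports can be sketched as follows. The sketch assumes the usual reading of the terms: an incremental transfer contains only new or changed records, whereas a cumulative transfer contains all records to date. The function names and record layout are hypothetical.

```python
def record_key(record, key_fields):
    """Build the identifying key for a record from the chosen key fields."""
    return tuple(record[k] for k in key_fields)


def apply_incremental(current, batch, key_fields):
    """Upsert: keep existing records, add or overwrite those in the batch."""
    merged = {record_key(r, key_fields): r for r in current}
    for record in batch:
        merged[record_key(record, key_fields)] = record
    return list(merged.values())


def apply_cumulative(current, batch, key_fields):
    """Replace: the batch is the complete data set; report what disappeared."""
    batch_keys = {record_key(r, key_fields) for r in batch}
    dropped = [r for r in current if record_key(r, key_fields) not in batch_keys]
    return list(batch), dropped


# Hypothetical records already held and a new transfer from the lab.
held = [
    {"subject_id": "1001", "visit": "WEEK4", "result": "32"},
    {"subject_id": "1002", "visit": "WEEK4", "result": "41"},
]
transfer = [{"subject_id": "1001", "visit": "WEEK4", "result": "35"}]
keys = ("subject_id", "visit")

after_incremental = apply_incremental(held, transfer, keys)
after_cumulative, dropped = apply_cumulative(held, transfer, keys)
```

Treated as incremental, the transfer updates subject 1001 and leaves subject 1002 untouched. Treated as cumulative, the same transfer implies that the record for subject 1002 was removed at the source, which is why the sketch returns the dropped records for review rather than deleting them silently.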

b) Integration of data in a separate repository

Integration of EDC data with data from other sources in a separate repository outside the EDC system treats EDC as just another data source. Some systems support a repository or warehouse associated with but outside the EDC system for this purpose. Others mention use of a CDMS39 or other products.40 While there are no industry accounts of these approaches in the published literature, they are likely common within organizations. Where data from the other sources are not needed by the sites for decision-making, integration in a repository or study data warehouse may be an acceptable and efficient solution.

c) Integration of EDC systems with pertinent other data sources

Some EDC systems and some external data providers support direct interfaces for real-time or near real-time data exchange. Historically these have taken more effort to set up than using existing EDC system functionality for batch imports. However, standards and technology to support interfaces and configure them within reasonable timeframes are now available. Haak reports such integration with imaging.41 Franklin described a similar approach of setting up point-to-point interfaces with other systems as needed at a large academic institution.42 Lu provides an example of multiple such interfaces with a commercial EDC system to support post-marketing studies.27 Today, direct interfaces are likely more common with infrastructure systems such as drug-supply management, financial site payment systems, and enterprise Sponsor or CRO Clinical Trial Management Systems (CTMSs).

d) External site access to external data

Some vendors involved in the collection and management of data from central clinical labs, core labs, ePRO systems, and central reading centers offer real-time access to an information system where the external data can be viewed by sites. However, these often require a separate login. Integration of external data into an EDC system may be required if the data have direct impact on clinical decisions or study management. Examples include where EDC-based randomization uses scores on patient-completed assessments or when doses are adjusted based on results from an external lab. When data are needed and expected to be used by site users of the EDC system, they should instead be integrated into the EDC system where the value of doing so outweighs the costs. [VI]

Because integration of external data usually includes reconciliation and cleaning of the data, integrating external data into the EDC system also facilitates interim analysis and database lock, since these checks will have been conducted on an ongoing basis throughout the study. Where it obviates the need for manual entry of data, integration of external data likely saves time and increases data quality. Maintaining a study blind is an additional consideration in integration of external data. Data with the potential to unblind a blinded study, for example, a lab result that might give away the treatment assignment, may require a separate integration strategy to accomplish ongoing reconciliation while maintaining the blind.

Setting up an EDC system to receive imported data usually requires creating data fields within the EDC system to receive and store the data to be integrated as well as the algorithms through which the incoming data are parsed, transformed if necessary, and written to the destination fields. Because data integration requires algorithmic or manual manipulation of data, the planned data integration should be fully specified, tested, and traceable [I].12 Detailed considerations and practices for designing, specifying, managing, and assuring the quality and compliance of externally managed data can be found in the GCDMP chapter titled Integration of External Data.
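One way to keep such an integration specified and traceable is to hold the mapping itself as data and to record what each transformation did. The following is a minimal sketch under stated assumptions: the source column names, destination field names, and the `map_record` function are hypothetical, and the date is assumed to arrive as day/month/year.

```python
from datetime import datetime

# Hypothetical mapping specification:
# source column -> (destination field, transformation)
MAPPING = {
    "RESULT": ("LB_ALT_VALUE", float),
    "UNIT": ("LB_ALT_UNIT", str.strip),
    "COLL_DT": (
        "LB_COLL_DATE",
        lambda s: datetime.strptime(s, "%d/%m/%Y").date().isoformat(),
    ),
}


def map_record(source):
    """Apply the mapping to one parsed source record.

    Returns the destination field values together with a trace that pairs
    each raw source value with the value written.
    """
    destination, trace = {}, []
    for column, (field_name, transform) in MAPPING.items():
        raw = source[column]
        value = transform(raw)
        destination[field_name] = value
        trace.append(
            {"source_column": column, "raw": raw, "field": field_name, "value": value}
        )
    return destination, trace


fields, trace = map_record({"RESULT": "32.0", "UNIT": " U/L ", "COLL_DT": "01/03/2024"})
```

Because the mapping is a single table, it can be reviewed against the written specification and exercised with test records before the first production transfer, and the trace preserves the link from each stored value back to the value received.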

17) Data Validation Checks (Edit Checks)

Data validation checks are algorithms that are used to screen data for invalid, questionable, or anomalous values. They are sometimes referred to as edit checks, query rules, or error checks. Data validation checks that identify problems as data are entered in EDC systems are also referred to as on-screen checks. EDC systems vary widely in the workflow related to query processing and status, for example whether on-screen checks that fire before data are committed to the database are tracked as discrepancies. A thorough understanding of the functionality and associated metadata is required to optimize processes using on-screen checks. Edit checks should be developed concurrently and iteratively as part of the eCRF with the eCRF specifications finalized prior to the edit check specifications. [VI]

On-screen checks enable enterers to address the flagged values sooner if not immediately, ideally during the assessment or when the source of the information is at hand. Preventing errors or catching data problems earlier reduces costs. It is widely accepted that total cost increases significantly the further downstream errors are caught. This concept has become known as the 1-10-100 rule and is described in three stages: error prevention is ten-fold less expensive than correction, and correction is in turn ten-fold less expensive than remediation of failures due to uncorrected errors.43 Thus, in a risk-based approach, costs associated with prevention can be weighed against cost of correction and damages from failures due to uncorrected errors. Further, use of on-screen edit checks with single-entered data is associated with data quality similar to that of double entered data.44 On-screen checks should be used with EDC to the extent that benefit outweighs cost associated with, for example, human safety, re-work, and regulatory delays [III].44
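The arithmetic behind the 1-10-100 rule can be made concrete with a small worked example. The relative weights of 1, 10, and 100 come from the rule as described above; the error counts in the two scenarios are invented purely for illustration and are not study data.

```python
# Relative cost per error at each stage, per the 1-10-100 rule.
RELATIVE_COST = {"prevented": 1, "corrected": 10, "failed": 100}


def relative_cost(counts):
    """Total relative cost for a given number of errors handled at each stage."""
    return sum(RELATIVE_COST[stage] * n for stage, n in counts.items())


# Two hypothetical scenarios for the same 100 potential errors.
without_checks = relative_cost({"prevented": 0, "corrected": 90, "failed": 10})
with_checks = relative_cost({"prevented": 80, "corrected": 18, "failed": 2})

print(without_checks)  # 1900
print(with_checks)     # 460
```

Under these assumed counts, moving most errors to the prevention stage reduces total relative cost by roughly three quarters. In a risk-based approach, the cost of building and maintaining the checks would be weighed against such a difference.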

Operationally, on-screen checks in EDC systems increase the immediacy with which Data Managers, study Monitors, or in-house Study Coordinators or Site Managers can become aware of and review unresolved discrepancies and interact with investigational sites to resolve them. Such data-driven contact by phone with site staff promotes an active approach to decreasing elapsed time to complete and clean data. See EDC Chapter 3 for more information on active study management. Getting data in and clean faster has always been a major part of the value proposition of EDC. At the same time, because EDC broadened the number and variability in users from internal personnel to users at the clinical sites involved in a study, the requirements for system training and usability are significantly increased.

a) Types of Edit Checks

Most EDC systems use a rules-based approach to identification of discrepant data and have functionality for authoring, storing, managing, and executing the rules and for tracking the lifecycle of identified discrepancies. Edit checks in EDC can be classified into two broad categories, “hard” edits and “soft” edits. Soft edits identify discrepant data and usually prompt the site for data correction but allow the data to be confirmed as is and saved so that entry can continue. Hard edits also identify discrepant data but prevent the identified data from being saved. In some systems, the form itself cannot be saved with open hard edits. In other systems, hard edits do not produce an alert that a user can “confirm as is” or override. Thus, hard edits are sometimes called non-actionable because the user cannot acknowledge the check and proceed; the only permissible action is to enter data that conform to the requirements. Data type checks (sometimes called browser checks because they almost always run in real time in the browser) are commonly implemented as hard edits. For example, if a user attempts to enter an alphabetical character in a numerical field, the check will not accept the data and if the field is required, the form will not save until conformant data are entered. Another type of hard edit is a property check. Property checks prevent entering data that do not match the form and/or item property settings of the field documented during system set-up. For example, when a field requires a number with 2 decimals, a value of “3” cannot be entered. Instead, a number with two digits to the right of the decimal must be entered to satisfy the property requirement. If the field is required, the form will not save until the property requirement is satisfied.
For this reason, hard edits are usually used for non-feasible scenarios such as physically impossible values while soft edits are used to identify data values that are unexpected or unlikely but which could occur. These considerations are more important in the context of EDC because the data enterer is at a clinical investigational site. Because failure of a hard edit prevents forward progress with the task of data entry, users are incentivized to enter a data value that will “pass the check”. Thus, we do not recommend use of hard edits in EDC. [VI] Many systems have evolved and now allow all checks to be implemented as soft edits and allow entry of otherwise invalid data along with a reason for the non-conformant data.
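The behavioral difference between hard and soft edits can be sketched in a few lines. This is a minimal illustration and not the logic of any particular EDC system: the `EditCheck` structure, the `try_save` function, the heart rate range, and the three outcome labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


def is_number(text):
    """Data type check: can the entered text be read as a number?"""
    try:
        float(text)
        return True
    except ValueError:
        return False


@dataclass
class EditCheck:
    message: str
    passes: Callable[[str], bool]
    hard: bool  # True: blocks saving; False: may be confirmed with a reason


# Hypothetical checks on a heart rate field.
CHECKS = [
    EditCheck("Value must be numeric.", is_number, hard=True),
    EditCheck(
        "Heart rate outside expected range 40-180.",
        lambda t: not is_number(t) or 40 <= float(t) <= 180,
        hard=False,
    ),
]


def try_save(value, reason=None):
    """Return (outcome, messages) for an attempted save of one field value."""
    failed = [c for c in CHECKS if not c.passes(value)]
    messages = [c.message for c in failed]
    if any(c.hard for c in failed):
        return "blocked", messages  # hard edit: nothing can be saved
    if failed and reason is None:
        return "needs_confirmation", messages  # soft edit: correct or confirm
    return "saved", messages  # clean, or confirmed as is with a reason


blocked = try_save("abc")
pending = try_save("210")
confirmed = try_save("210", reason="Confirmed against source ECG")
clean = try_save("72")
```

Setting `hard=False` on the data type check would turn it into a soft edit, which corresponds to the approach in which otherwise invalid data can be entered along with a reason.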

b) Lifecycle Documentation and Management of Edit Checks

Because data entry is done by investigational sites with EDC, the user interface and usability become more important. If a discrepant data value is identified by an edit check, a real-time indicator such as a color change, an audible alert, haptic feedback, or a change in iconography on or near the discrepant data is most helpful to the user. [VI] Similarly, an explanation of the discrepancy should be readily available, whether the cause is a single data point error or an erroneously fired query that generates multiple errors. [VI] In addition to real-time cues to the user, a lifecycle record for all detected discrepancies best meets the traceability requirements as stated in ICH E6(R2) [I].11 Such a record allows the history of a data value to be reconstructed: the original entry, whether or not a discrepancy prompt was issued, and any subsequent changes to the data. In the absence of such a record, it is not possible to distinguish prompted from unprompted changes to data following the initial entry. Further, a record of open discrepancies facilitates reporting and active management of data collection and cleaning. EDC system functionality for lifecycle documentation and management of discrepancies varies.
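A lifecycle record of this kind can be sketched as a small state machine that logs every event. The status names, the allowed transitions, and the `Discrepancy` class below are assumptions made for illustration; actual EDC systems define their own query statuses and metadata.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical statuses and the transitions permitted between them.
ALLOWED = {
    "open": {"answered", "closed"},
    "answered": {"open", "closed"},  # an answer can be rejected and re-opened
    "closed": set(),
}


@dataclass
class Discrepancy:
    field_name: str
    check_name: str
    value_at_detection: str
    status: str = "open"
    history: list = field(default_factory=list)

    def __post_init__(self):
        # Detection is the first entry in the lifecycle record.
        self._log("detected", self.value_at_detection)

    def _log(self, event, detail):
        self.history.append(
            {
                "at": datetime.now(timezone.utc).isoformat(),
                "event": event,
                "status": self.status,
                "detail": detail,
            }
        )

    def transition(self, new_status, detail):
        """Move to a new status, refusing transitions that are not allowed."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"cannot move from {self.status} to {new_status}")
        self.status = new_status
        self._log(new_status, detail)


query = Discrepancy("HEART_RATE", "range 40-180", "210")
query.transition("answered", "site corrected value to 110")
query.transition("closed", "data manager accepted correction")
events = [entry["event"] for entry in query.history]
```

Because each entry is time-stamped, a change to a data value can be compared with the record to determine whether a discrepancy was open on that field at the time, that is, whether the change was prompted or unprompted.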